Recently I’ve been looking at writing some simple games for mobile devices. After extensive research I’ve settled on a framework called Phaser – it is actively supported and it uses HTML5 WebGL / canvas, falling back to canvas if WebGL is not supported on the device. This is great, especially given that Windows Phone has just announced support for these elements, with iOS and Android already having them.

My biggest concern is working on a larger project in javascript – you don’t get any of the cool features of Visual Studio. In fact, most people recommend simple text editors for javascript. This means no F12, no Find all References, not to mention that object oriented programming in javascript is horrible. So – Typescript to the rescue! If you don’t know what Typescript is, I highly recommend watching a presentation by Anders Hejlsberg, the creator of C#. It is a superset of javascript that looks a bit more like C# (you get classes, inheritance, visibility modifiers and even generics and C#-style lambdas, plus an inbuilt module system that looks like namespaces). It compiles to javascript, and you package and send the javascript directly to the users.
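To make the C# comparison concrete, here is a small sketch of those features: classes, inheritance, visibility modifiers, generics and lambdas. It is not code from the game port and deliberately uses no Phaser API; the class names, the jump strength and the gravity parameter are made up for illustration.

```typescript
// Generic container, similar to a List<T> in C#.
class ObjectPool<T> {
    private items: T[] = [];

    add(item: T): void {
        this.items.push(item);
    }

    // Arrow functions play the role of C# lambdas.
    count(predicate: (item: T) => boolean): number {
        return this.items.filter(predicate).length;
    }
}

// Base class with a protected field and an overridable method.
class GameEntity {
    protected y: number;

    constructor(y: number) {
        this.y = y;
    }

    // Static entities do not move; subclasses override this.
    update(dt: number): void {}

    getY(): number {
        return this.y;
    }
}

// Inheritance via "extends"; "super" calls the base constructor.
class FlappyBird extends GameEntity {
    private velocity = 0;
    private gravity: number;

    constructor(y: number, gravity: number) {
        super(y);
        this.gravity = gravity;
    }

    flap(): void {
        this.velocity = -350; // hypothetical jump strength, pixels per second
    }

    update(dt: number): void {
        this.velocity += this.gravity * dt;
        this.y += this.velocity * dt;
    }
}

// Visibility is checked at compile time: new FlappyBird(0, 0).velocity is an error.
```

As in C#, the `private` check only exists at compile time; the emitted javascript is plain prototype-based code.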

I am not going to post the actual tutorial – I followed the excellent Flappy Birds with Phaser tutorial by Jeremy Dowell, a great way to learn Phaser and 2D game programming concepts. I then rewrote the game in Typescript (with a few minor changes). Note that I do not own any of the images, sounds etc.; this is sample code and a personal project. I’d also like to praise the Phaser team, who accepted all the bugs I logged (minor Typescript mapping issues) and fixed them in a matter of hours (wow!).

You will need Visual Studio 2013 Update 2 to run this game. Update 2 is not released yet, but you can download the RC (release candidate). Update 2 includes Typescript, so you don’t need to download any other extension. I am using IIS and have also configured Browser Link to speed up development. I find the code very easy to read (especially if you know C# / Java), and the static typing saved me countless times, not to mention that IntelliSense works. Sadly the sample does not work in IE (bummer, I really like its javascript debugging tool – F12) because IE does not support playing .wav sound files. I tested everything using Chrome. I also recommend you start with the short “getting started” Phaser tutorial using Typescript, which explains how to use Visual Studio.

So what do you get?

– Typescript (all the advantages of a strongly typed language invented by the creator of C#). This does not absolve you from having to read javascript, as you will still have to debug it.

– Visual Studio editor support (including IntelliSense, F12, Find all References etc.).

– some Visual Studio debugging (you can debug Typescript from VS as well as from the browser, but to be honest game frameworks are highly event driven and debugging messes up the timings, so you’ll have to learn to rely on logging)

What’s not great?

– The Typescript mappings are not documented, so if you hover over a method you do not get its documentation like you do in C#. I understand this is a hard problem and the Phaser team are looking into it, but there has been no progress so far. To work around this you still have to consult the docs or manually search the phaser.js file;

– A slightly longer code-to-browser cycle because of the extra compilation step, and occasional sync issues between VS and the browser

– IIS also introduces some small problems, chief among them security restrictions on certain file formats. The fix is trivial (add a line in your web.config) but

– my implementation still has a few bugs 🙂

The solution is shared via my OneDrive.

Take control of MSBuild using MSBuild API

When you press Build in Visual Studio, MSBuild is invoked and made to execute the target called Build. Press Clean and MSBuild executes the target Clean. And so on.

How do you think Visual Studio invokes MSBuild? If you take a look at Stack Overflow you will see that the majority of answers point to the MSBuild command line interface. And for good reason: it is quite versatile – you can add different loggers, specify targets, pass in parameters and much more. So naturally, an easy fix is to start a process that executes the command line and redirects the log to a file. This is all good and noble, but:

Using the MSBuild cli sometimes limits flexibility

You are basically doing inter-process communication – wait for the CLI to finish and read some file. What if you want to react sooner to some event, like cancelling the build midway because of some change? For example, if you press CTRL+Break in Visual Studio it cancels the build. In this article I will show you how Visual Studio communicates with MSBuild via the MSBuild API, and use it to solve an actual problem.

The problem

My goal is to go over hundreds of solutions (retrieved from sites like codeplex) and see if the Architecture > Dependency Diagram works for them. But first, I need to make sure they build. It can be done using the command line, but I want to call this verification from different threads and inter process communication would complicate things. Also, I dread parsing log files!

MSBuild API 4.0 in action

The same API is used by Visual Studio, yet I found that it is not advertised a lot. There were major changes between versions 3.5 and 4 of the .NET Framework, so a lot of blog posts are out of date.

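Anyway, here we go. Add references to the MSBuild API assemblies (I overindulged here, you can get away with less): Microsoft.Build and Microsoft.Build.Framework for the build itself, plus Microsoft.Build.Utilities.v4.0 for the custom logger base class used further down.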

Now let’s invoke MSBuild API to build a solution:

using System.Collections.Generic;
using Microsoft.Build.Evaluation;   // ProjectCollection
using Microsoft.Build.Execution;    // BuildManager, BuildParameters, BuildRequestData, BuildResult

string projectFilePath = @"D:\solutions\App\app.sln";

ProjectCollection pc = new ProjectCollection();

// there are a lot of properties here, these map to the msbuild CLI properties (/p:Name=Value)
Dictionary<string, string> globalProperties = new Dictionary<string, string>();
globalProperties.Add("Configuration", "Debug");
globalProperties.Add("Platform", "x86");
globalProperties.Add("OutputPath", @"D:\Output");

BuildParameters bp = new BuildParameters(pc);

BuildRequestData buildRequest = new BuildRequestData(projectFilePath, globalProperties, "4.0", new string[] { "Build" }, null);

// this is where the magic happens - in process MSBuild
BuildResult buildResult = BuildManager.DefaultBuildManager.Build(bp, buildRequest);

// a simple way to check the result
if (buildResult.OverallResult == BuildResultCode.Success)
{
    //...
}

Get more out of MSBuild with a custom logger

My next goal is to capture some high level details about the projects built in case of build success and to log the errors in case the build fails. And I found this is quite easy to do using a custom logger. So here’s how to create your own logger:

using System;
using System.Collections.Generic;
using System.Text;
using Microsoft.Build.Framework;   // IEventSource and the build event args
using Microsoft.Build.Utilities;   // Logger base class

public class MsBuildMemoryLogger : Logger
{
    private StringBuilder errorLog = new StringBuilder();

    public string BuildErrors { get; private set; }

    /// <summary>
    /// This will gather info about the projects built
    /// </summary>
    public IList<string> BuildDetails { get; private set; }

    /// <summary>
    /// Initialize is guaranteed to be called by MSBuild at the start of the build
    /// before any events are raised.
    /// </summary>
    public override void Initialize(IEventSource eventSource)
    {
        BuildDetails = new List<string>();

        // For brevity, we'll only register for certain event types.
        eventSource.ProjectStarted += new ProjectStartedEventHandler(eventSource_ProjectStarted);
        eventSource.ErrorRaised += new BuildErrorEventHandler(eventSource_ErrorRaised);
    }

    void eventSource_ErrorRaised(object sender, BuildErrorEventArgs e)
    {
        // BuildErrorEventArgs adds LineNumber, ColumnNumber, File, amongst other parameters
        string line = String.Format("{0}({1},{2}): error: {3}", e.File, e.LineNumber, e.ColumnNumber, e.Message);
        errorLog.AppendLine(line);
    }

    void eventSource_ProjectStarted(object sender, ProjectStartedEventArgs e)
    {
        BuildDetails.Add(e.Message);
    }

    /// <summary>
    /// Shutdown() is guaranteed to be called by MSBuild at the end of the build, after all
    /// events have been raised.
    /// </summary>
    public override void Shutdown()
    {
        // Done logging, publish the collected errors (nothing is written to disk)
        BuildErrors = errorLog.ToString();
    }
}

Registering the logger

Now that we have created a custom logger, all we need to do is pass it to MSBuild, like this:

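BuildParameters bp = new BuildParameters(pc);
MsBuildMemoryLogger customLogger = new MsBuildMemoryLogger();
bp.Loggers = new List<ILogger>() { customLogger };   // ILogger lives in Microsoft.Build.Framework

// once the build has finished, read customLogger.BuildErrors and customLogger.BuildDetails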

Resources

This MSDN page features the entire sample on how to build a custom logger.

I also found this blog that describes how to inject stuff into a csproj.

MsBuild cheat sheet

Target = a named group of tasks; the unit of execution you invoke from the command line

The examples use a target to print stuff to the console. Run them with msbuild myFile.proj /t:PrintStuff

Properties

These are your scalar variables. They behave in the same way as in imperative languages (C like ones at least).

<PropertyGroup>
  <Foo>Bar</Foo>
</PropertyGroup>
<Target Name="PrintStuff">
  <!-- this is how you access the var -->
  <Message Text="The value of Foo is: $(Foo)" />
</Target>

All properties and items are parsed before targets are executed.

Environment variables can be read in the same way, e.g. $(PATH).

To change a property, redefine it inside a target (or in a later PropertyGroup).

Items

These are your arrays. They work best when holding file paths, since you get the * wildcard and the ** which means “all subdirectories”.

<ItemGroup>
  <MyFiles Include="pics/sunny.png;pics/rainy.png" />
  <MyFiles Include="pics/others/car.png" />
  <!-- or use wildcards to define the same thing (use one form or the other: items are not de-duplicated, so listing both adds every file twice) -->
  <MyFiles Include="pics/**/*.png" />
</ItemGroup>
<Target Name="PrintStuff">
  <!-- all 3 elements will be here, separated by semicolons -->
  <Message Text="MyFiles has: @(MyFiles)" />
</Target>

Include is like the Add method in C#. You can also use Remove to, well..., remove an element. This makes sense since you can use items inside targets.

Custom metadata

These are your objects with a twist…

<ItemGroup>
  <Person Include="Person1">
    <FName>Joe</FName>
    <Age>42</Age>
  </Person>
  <Person Include="Person2">
    <FName>Mark</FName>
    <Age>37</Age>
  </Person>
</ItemGroup>
<!-- Outputs="%(Person.Identity)" makes the target execute in batches, one per person, i.e. a for loop is at the top; inside each batch @(Person) holds only the current person -->
<Target Name="PrintStuff" Outputs="%(Person.Identity)">
  <Message Text="We have these people: @(Person)" />
  <Message Text="Details: %(Person.FName) is %(Person.Age)" />
</Target>

Msbuild does give you some metadata to play with. Again this is oriented towards files. Details here. You can see that Identity is the value specified in the Include attribute of the item.

Transformations

<!-- define an item group named Src with some files and a property Dest for the destination dir (ending in a backslash) -->
<Target Name="CopyFiles">
  <Copy SourceFiles="@(Src)" DestinationFiles="@(Src->'$(Dest)%(Filename)%(Extension)')" />
</Target>

The example is pretty self-explanatory – the destination files are defined by transforming the Src item group. Filename and Extension are well-known metadata.

Optimizing your native program

This is an attempt at explaining some common techniques to optimize native code. I find the terminology used when talking about this intimidating, so this article should make for an easy Sunday evening read.

Inlining

Have the body of a method copied into the method it is called from:

#include <iostream>

class Cpp
{
public:
    void Foo()
    {
        int i = 10;
        Bar(i);
    }

    void Bar(int i)
    {
        std::cout << i * i * i;
    }

    // after inlining, Foo effectively becomes:
    // void Foo()
    // {
    //     int i = 10;
    //     std::cout << i * i * i;
    // }
};

In fact, in C++ you can use the inline keyword to tell the compiler that doing this is a good idea because you expect the function to be called a lot. Of course, the compiler, being the disobedient beast that it is and knowing that there are a lot of stupid people out there, doesn’t have to listen to you. There’s quite a bit of theory behind when inlining is a good thing and when it goes bad, but suffice to say your best bets are small, hot functions.

Partial inlining

This is the same as inlining but the compiler only copies a block of code instead of the entire function. Usually the block copied is a branch that is used frequently.
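A hand-written illustration of the idea, in Typescript for readability. The compiler performs this transformation on machine code by itself; the functions lookup, computeSlow, sumBefore and sumAfter are hypothetical and only show what the result is equivalent to: the hot branch (a cache hit) is copied into the caller, while the cold branch stays an ordinary call.

```typescript
// Rarely executed, comparatively expensive branch (a hypothetical hash computation).
function computeSlow(key: string, cache: Record<string, number>): number {
    let value = 0;
    for (let i = 0; i < key.length; i++) {
        value = (value * 31 + key.charCodeAt(i)) % 1000003;
    }
    cache[key] = value;
    return value;
}

// Hot path: most calls find the key already cached.
function lookup(key: string, cache: Record<string, number>): number {
    if (Object.prototype.hasOwnProperty.call(cache, key)) {
        return cache[key];
    }
    return computeSlow(key, cache);
}

// Before: every iteration pays for a full call to lookup().
function sumBefore(keys: string[], cache: Record<string, number>): number {
    let total = 0;
    for (const key of keys) {
        total += lookup(key, cache);
    }
    return total;
}

// After partial inlining (done by hand here): only the hot branch of lookup()
// is copied into the loop; the cold branch remains a call to computeSlow().
function sumAfter(keys: string[], cache: Record<string, number>): number {
    let total = 0;
    for (const key of keys) {
        total += Object.prototype.hasOwnProperty.call(cache, key)
            ? cache[key]
            : computeSlow(key, cache);
    }
    return total;
}
```

Both versions compute the same thing; the second simply avoids a call on the common path without bloating the caller with the rarely used slow code.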

Virtual Call Speculation

Sounds fancy huh? Imagine the classical hierarchy Cat is an Animal and Dog is an Animal. The optimization would look like:

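// --- before optimization ---
myAnimal.Eat();              // virtual call, target resolved at run time

// --- after optimization ---
if (myAnimal is Cat)
    ((Cat)myAnimal).Eat();   // call Cat directly (and now it can even be inlined)
else if (myAnimal is Dog)
    ((Dog)myAnimal).Eat();   // call Dog directly
else
    myAnimal.Eat();          // any other Animal: fall back to the virtual call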
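The same idea written out by hand in Typescript, assuming a profile showed that most animals are cats or dogs (Cow stands in for any other subclass). A native compiler does this for you from profile data, and JavaScript engines apply a similar speculation at run time; the code only makes the shape of the result visible.

```typescript
abstract class Animal {
    abstract eat(): string;
}

class Cat extends Animal {
    eat(): string {
        return "Cat eats fish";
    }
}

class Dog extends Animal {
    eat(): string {
        return "Dog eats meat";
    }
}

class Cow extends Animal {
    eat(): string {
        return "Cow eats grass";
    }
}

// Before: a virtual (dynamically dispatched) call.
function feed(animal: Animal): string {
    return animal.eat();
}

// After speculation: test for the receiver types the profile says are common,
// call their methods directly, and keep the virtual call as a fallback so that
// any other Animal still behaves correctly.
function feedSpeculative(animal: Animal): string {
    if (animal instanceof Cat) {
        return Cat.prototype.eat.call(animal); // direct call to Cat's method
    }
    if (animal instanceof Dog) {
        return Dog.prototype.eat.call(animal); // direct call to Dog's method
    }
    return animal.eat(); // fallback: ordinary virtual call
}
```

The fallback branch is what keeps the optimization safe: speculation only changes how fast the call is, never which method runs.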

Register Allocation

Well, there are only so many registers to go around (I’m talking about CPU registers, not the Windows registry). They are fast but few; in fact registers are the fastest memory there is, because of their physical location inside the CPU. As such, there is a lot of rivalry for them (the fancy word is contention, but it sounds a bit disturbing). This is solved by good old repurposing of a register, i.e. saving its value somewhere in memory (this is called spilling) and using the register for something hotter (again, I use temperature to depict usage). On a personal note, if you could do this manually, you should probably work with the Windows kernel team (or whatever OS you fancy).
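Deciding who gets a register and who gets spilled is the register allocator’s job. One classic approach is linear scan allocation (Poletto and Sarkar): walk the variables’ live ranges in order of their start and, when no register is free, spill the variable whose range ends last. The sketch below is a simplified version of that heuristic, assuming each variable has a single live interval measured in instruction indices; real compilers also weigh how hot each variable is.

```typescript
// The range of instruction indices during which a variable holds a live value.
interface LiveInterval {
    name: string;
    start: number;
    end: number;
}

// Returns, for each variable, a register name or "spill" (value kept in memory).
function linearScan(intervals: LiveInterval[], registers: string[]): Record<string, string> {
    const assignment: Record<string, string> = {};
    const free = registers.slice();
    let active: LiveInterval[] = [];

    const sorted = intervals.slice().sort((a, b) => a.start - b.start);
    for (const current of sorted) {
        // Expire intervals that ended before this one starts; their registers become free.
        active = active.filter(iv => {
            if (iv.end < current.start) {
                free.push(assignment[iv.name]);
                return false;
            }
            return true;
        });

        if (free.length > 0) {
            assignment[current.name] = free.shift() as string;
            active.push(current);
            continue;
        }

        // No register left: spill whichever live interval ends last.
        active.sort((a, b) => a.end - b.end);
        const furthest = active.length > 0 ? active[active.length - 1] : undefined;
        if (furthest && furthest.end > current.end) {
            assignment[current.name] = assignment[furthest.name]; // steal its register
            assignment[furthest.name] = "spill";
            active.pop();
            active.push(current);
        } else {
            assignment[current.name] = "spill";
        }
    }
    return assignment;
}
```

With two registers and four overlapping variables, the long-lived variable that spans everything is the one that ends up in memory, freeing a register for the short-lived ones.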

Basic Block or Function Layout

Change how the basic blocks of a function are laid out in memory and you will improve cache efficiency. The key to this is having a heat map – which blocks are hot (used frequently) and which are cold. The same can be done at the function level.

Dead Code / Data Separation

Dead code is code that is not hit during normal scenarios. This could be defensive programming checks (if param is null then assert), code that is dead due to churn, or code that is simply hit only by wacky testers. Moving this code to a separate memory location increases the density of hot code and decreases the working set (i.e. the memory actually used by the program or, to be more academic, the pages in memory recently used). Why? Because the dead code ends up in memory pages that never get loaded.
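A toy sketch of the layout decision, assuming you already have per-block execution counts from a profiling run (the block names and counts are hypothetical). Real optimizers also keep the entry block first and try to make hot blocks fall through into each other; this only shows the core idea of packing hot code together and pushing never-executed code elsewhere.

```typescript
interface BasicBlock {
    id: string;
    executionCount: number; // taken from a profiling run
}

// Hot blocks are packed together, most executed first; blocks never executed
// during profiling are moved to a separate "cold" section.
function layoutByHeat(blocks: BasicBlock[]): { hot: string[]; cold: string[] } {
    const hot = blocks
        .filter(b => b.executionCount > 0)
        .sort((a, b) => b.executionCount - a.executionCount)
        .map(b => b.id);
    const cold = blocks
        .filter(b => b.executionCount === 0)
        .map(b => b.id);
    return { hot, cold };
}
```

For example, a defensive assertFailed block with a count of zero lands in the cold list, so the hot loop body and its neighbours share fewer, denser cache lines and pages.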

Conditional Branch Optimization

Apparently some shapes of the switch statement are expensive. Imagine that case 6 is hotter than the rest. Then:

// this is faster
if (i == 6)
{
    //...
}
else
{
    switch (i)
    {
        case 1: /* ... */ break;
        case 2: /* ... */ break;
    }
}

Size / Speed Optimization

Programs can be optimized for either size or speed with a compiler switch (in Visual C++, /O1 minimizes size and /O2 maximizes speed). Optimizing for speed favors longer instruction sequences (for example inlined calls), which run faster but need more memory; optimizing for size does the opposite. But this choice applies to the entire program, and you would get much better results if you could optimize:

- hot paths for speed

- cold (rarely executed) paths for size

The grand finale?

I hope I have shed some light on what is really just a high density of fancy words. Your next questions should be: how the hell do I do this, and what speed-up should I expect?

To answer in reverse order: about 20% when using the Profile Guided Optimizer (free, here). It works from profiling information, so you need to collect this information by running your most common scenarios. The MSDN article explains the whole process. Enjoy!

How to mock a [web] server – the easy way

Many a time we need isolation. Isolate your client app from the server – that will show the people who develop the server! Testing a client has one major headache – how can one test the behavior without controlling the input data? Think accènts, empty titles, long titles, bad response format, 404, 504, 500, 502 etc. You could ask the people who maintain the server to drop in the data you need, but that’s a dependency you can neither control nor bribe (well, sometimes you can bribe them with beer, but if they are married it won’t work often…).

So – mock that server!

You can argue that mocking the entire server is too much. Why not just mock the data layer accessing the network? Well, 3 reasons:

- the data layer can and will be changed a lot

- not all flows go through the same data layer, there’s always some authentication call that makes a weird detour

- you’ll be testing the real app, not a hacked and detoured version of it

Well, if you are still reading this, you might already know Fiddler. It’s the single most useful tool for client / server apps. Ever. It proxies the data transfers and matches requests with responses, and it has visualizers for XML, HTML and images. Heck, it can even read HTTPS (really, it installs a fake certificate on your machine so it becomes a man in the middle). You can set breakpoints before a request or a response. It has scripting support and an API in C#. It’s god’s gift to all testers! And it’s free.

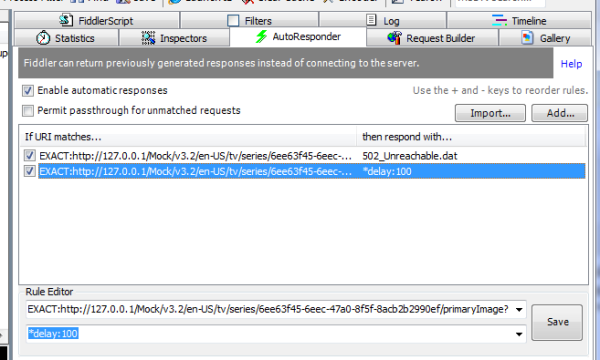

Well, Fiddler has a nice feature called AutoResponder:

The UI is pretty much self-explanatory – you build up a list of rules, where a rule is (URI_TEMPLATE, ACTION_TO_PERFORM). You are free to use wildcards in templates. As with most access lists, rules are picked top to bottom. The AutoResponder can also route URIs to the real server, acting as, well, a router :). And coolest of all, you can send responses from files, inject delays and web errors:

Oh, in case you are wondering, *bpu and *bpafter break the flow before the request is sent and after the response arrives, respectively (it works like Visual Studio breakpoints, so you can make manual modifications to the data). Fiddler is extensible, so you can add more rules!
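To make the rule semantics concrete, here is a small conceptual model of what is described above: an ordered rule list, wildcard templates, first match wins, and unmatched requests forwarded to the real server. This is not Fiddler’s API or its exact matching logic; the Action shapes and the anchored wildcard matching are assumptions made for illustration.

```typescript
// Hypothetical actions a rule can perform.
type Action =
    | { kind: "file"; path: string }   // answer with a saved response file
    | { kind: "status"; code: number } // answer with an HTTP error
    | { kind: "delay"; ms: number }    // wait, then forward to the real server
    | { kind: "passthrough" };         // forward to the real server

interface Rule {
    pattern: string; // "*" matches any run of characters
    action: Action;
}

// Turn a wildcard template into an anchored regular expression.
function wildcardToRegExp(pattern: string): RegExp {
    const escaped = pattern.replace(/[.+?^${}()|[\]\\]/g, "\\$&");
    return new RegExp("^" + escaped.replace(/\*/g, ".*") + "$");
}

// Rules are evaluated top to bottom and the first match wins;
// requests matching no rule go to the real server.
function respond(rules: Rule[], uri: string): Action {
    for (const rule of rules) {
        if (wildcardToRegExp(rule.pattern).test(uri)) {
            return rule.action;
        }
    }
    return { kind: "passthrough" };
}
```

Because order matters, specific templates (a single endpoint returning 504) go above catch-all ones such as "*".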

Conclusion

There you have it, these are all the elements needed to create a mock server. Fiddler can load and save your list of rules. Test case automation? Save all requests for a flow to files, mock them and have one request return an error. You’ll get a test suite of number_of_requests x number_of_possible_errors. Managers will love you!
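The test matrix is easy to generate. A minimal sketch, assuming each recorded request is identified by its URI and has a saved response file; the RecordedRequest and ErrorScenario shapes are hypothetical, and turning each scenario into actual AutoResponder rules is left out.

```typescript
interface RecordedRequest {
    uri: string;
    responseFile: string;
}

// One test case: every request is served from its recorded file,
// except failingUri, which returns statusCode.
interface ErrorScenario {
    failingUri: string;
    statusCode: number;
    rules: { uri: string; response: string }[];
}

// Produces number_of_requests x number_of_possible_errors scenarios.
function buildScenarios(flow: RecordedRequest[], errorCodes: number[]): ErrorScenario[] {
    const scenarios: ErrorScenario[] = [];
    for (const failing of flow) {
        for (const code of errorCodes) {
            scenarios.push({
                failingUri: failing.uri,
                statusCode: code,
                rules: flow.map(r => ({
                    uri: r.uri,
                    response: r.uri === failing.uri ? `HTTP ${code}` : r.responseFile,
                })),
            });
        }
    }
    return scenarios;
}
```

A flow of three requests checked against 404, 500, 502 and 504 yields twelve scenarios.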

Object Oriented Design

I found this article on OOD very interesting. Not your average GoF bs patterns. And you can impress your friends with names like “The Liskov Substitution Principle”. What more can you ask for?

Later edit:

It seems these principles are becoming more and more popular and go by the name of SOLID.

S – Single Responsibility Principle

O – Open/Closed Principle

L – Liskov Substitution Principle

I – Interface Segregation Principle

D – Dependency Inversion Principle
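For a taste of the Liskov Substitution Principle, here is the classic Rectangle / Square example (a textbook illustration, not taken from the linked article): a square is a rectangle mathematically, but code written against Rectangle’s contract breaks when handed a Square.

```typescript
class Rectangle {
    protected width = 0;
    protected height = 0;

    setWidth(w: number): void {
        this.width = w;
    }

    setHeight(h: number): void {
        this.height = h;
    }

    area(): number {
        return this.width * this.height;
    }
}

// Looks like a natural "is-a" relationship, but it changes the base class's behaviour:
// setting one side silently changes the other.
class Square extends Rectangle {
    setWidth(w: number): void {
        this.width = w;
        this.height = w;
    }

    setHeight(h: number): void {
        this.width = h;
        this.height = h;
    }
}

// Client code that relies on Rectangle's contract: width and height are independent.
function resizeTo5x4(r: Rectangle): number {
    r.setWidth(5);
    r.setHeight(4);
    return r.area();
}
```

resizeTo5x4 returns 20 for a Rectangle but 16 for a Square, so Square cannot be substituted for its base class, which is exactly what the principle forbids.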

Research gone good – Spec Explorer

The problem

Whatever testing technique you choose it does not guarantee that you actually cover what your app can do!

Take for example a mobile application – it’s a collection of screens and you can automate navigation using “coded UI”-like technologies, but what if you forget the “back” functionality? Or what if requirements change and you have to redo the navigation? And requirements will change. That’s about as certain as hydrogen and stupid people.

You will eventually need to change test code to cover the new flows. Then you run into 2 problems:

- Some flows are not obvious (specs are not maintained, you fell asleep in a scrum meeting, and other chaotic pleasantries that happen close to the release date). If you forget to remove an old flow, you will get test failures. If you forget to add a new flow, you will not receive a single warning!

- How can you guarantee full coverage of your application? (not code coverage, but coverage of all the possible flows)

Introducing Spec Explorer!

For quite some time, Microsoft has been investing in a research project called Spec Explorer. It is available as a free download here and it integrates with your VS.

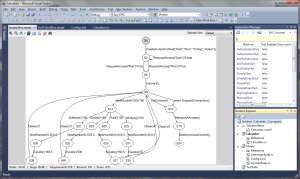

Spec Explorer can be used to define a model as a finite state machine. Here’s a preview:

The way it works is that you define the model using some special constructs – attributes and a special file. The tool then generates a nice graph like that, which is the finite state machine of the ideal program. Yup, you have to define your own model that does the same thing as the original, but this is far easier than it sounds. You don’t need a full-blown implementation, just a very simple one that teaches Spec Explorer how to draw that graph. The graph is made of:

- nodes – the state of the program (ex: how much money the user has in his account and if he is underage)

- transitions – flow elements, user actions (ex: user buys a video, so the next state has updated money info, or the user cannot buy the video because it is marked adult and he is underage)

While you need to teach Spec Explorer all the business rules, it will generate all the possible flows of your app!
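To give a feel for what “teaching the business rules” produces, here is a hand-rolled Typescript sketch of exploring such a model. It is not Spec Explorer’s API (Spec Explorer works from the attributes and special file described above); the prices, state fields and action names are all hypothetical. A breadth-first search from the initial state collects every reachable node and every transition, which is the graph the tool draws.

```typescript
// A node of the graph: the (hypothetical) state of the user.
interface UserState {
    money: number;
    underage: boolean;
}

// An edge of the graph: an action that moves the model between states.
interface Transition {
    from: string;
    action: string;
    to: string;
}

const REGULAR_PRICE = 5; // hypothetical price of a regular video
const ADULT_PRICE = 8;   // hypothetical price of an adult video

// Hypothetical business rules: which actions are enabled and where they lead.
function enabledActions(s: UserState): { action: string; next: UserState }[] {
    const actions: { action: string; next: UserState }[] = [];
    if (s.money >= REGULAR_PRICE) {
        actions.push({ action: "BuyRegularVideo", next: { money: s.money - REGULAR_PRICE, underage: s.underage } });
    }
    if (s.underage) {
        // Refused purchase: the state does not change (a self-loop in the graph).
        actions.push({ action: "BuyAdultVideoRefused", next: s });
    } else if (s.money >= ADULT_PRICE) {
        actions.push({ action: "BuyAdultVideo", next: { money: s.money - ADULT_PRICE, underage: false } });
    }
    return actions;
}

function stateKey(s: UserState): string {
    return `money=${s.money}, underage=${s.underage}`;
}

// Breadth-first exploration: every reachable state and every transition.
function explore(initial: UserState): { states: string[]; transitions: Transition[] } {
    const seen: Record<string, boolean> = {};
    const states: string[] = [stateKey(initial)];
    const transitions: Transition[] = [];
    const queue: UserState[] = [initial];
    seen[stateKey(initial)] = true;

    while (queue.length > 0) {
        const current = queue.shift() as UserState;
        for (const { action, next } of enabledActions(current)) {
            const key = stateKey(next);
            transitions.push({ from: stateKey(current), action, to: key });
            if (!seen[key]) {
                seen[key] = true;
                states.push(key);
                queue.push(next);
            }
        }
    }
    return { states, transitions };
}
```

Starting from an underage user with 10 in the account, this finds 3 states and 5 transitions, including the refused-purchase self-loops that a hand-written test suite could easily forget.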

Not counting the learning curve, it takes about 5-7 man-days to program a model of an application that is reasonably well specced and that would require 8 man-months of actual work. Plan for an extra 5 man-days of learning; Spec Explorer is pretty abstract despite the nice drawings it produces. But the learning is a fixed cost, so make sure you pay it only once. And it is agile-friendly: you can develop parts of your model as features roll out, you don’t need the whole thing at once.

Model and Implementation

So, you have an ideal model and you can build that graph: what next? Here comes the beauty – Spec Explorer can extract all the flows as unit tests. In other words, it can generate a set of tests that together cover ALL states and ALL transitions.
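One simple way to derive such tests from a graph is sketched below: for every transition, take the shortest path from the initial state to the transition’s source and append the transition itself. This is an assumed strategy for illustration, not necessarily the one Spec Explorer uses, and it produces redundant tests (many are prefixes of others); the node and action names are hypothetical and echo the movie example that follows.

```typescript
interface Edge {
    from: string;
    action: string;
    to: string;
}

// Shortest action sequence (breadth-first) from start to every reachable node.
function shortestPaths(start: string, edges: Edge[]): Record<string, string[]> {
    const paths: Record<string, string[]> = {};
    paths[start] = [];
    const queue: string[] = [start];
    while (queue.length > 0) {
        const node = queue.shift() as string;
        for (const e of edges) {
            if (e.from === node && paths[e.to] === undefined) {
                paths[e.to] = paths[node].concat(e.action);
                queue.push(e.to);
            }
        }
    }
    return paths;
}

// One test per reachable transition: walk to its source state, then fire it.
// Together the tests exercise every reachable state and every transition.
function generateTests(start: string, edges: Edge[]): string[][] {
    const paths = shortestPaths(start, edges);
    return edges
        .filter(e => paths[e.from] !== undefined)
        .map(e => paths[e.from].concat(e.action));
}
```

Each generated sequence of action names would then be mapped to calls on the real app, as in the example below.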

Hold on now, these are all based on the ideal model you build, so what’s the purpose? Well, the tests will not call into the ideal model but into the real app. Let’s take an example of a generated test, of an underage teen trying to buy an adult movie:

User teenUser = new User(UserType.Underage);
Implementation.GoToMoviesPage();
Implementation.SelectAMovie();
Implementation.BuyMovie(teenUser);
Implementation.GoToUnableToBuyDetails();

You are free to add anything you want in those methods. Of course, you should add navigation and asserts. Add them once, Spec Explorer will use them in hundreds of tests! And the beauty of it is that you mathematically cover all flows!

In my next blog post I’ll show how to create a model for a client application.